Prompt Chaining

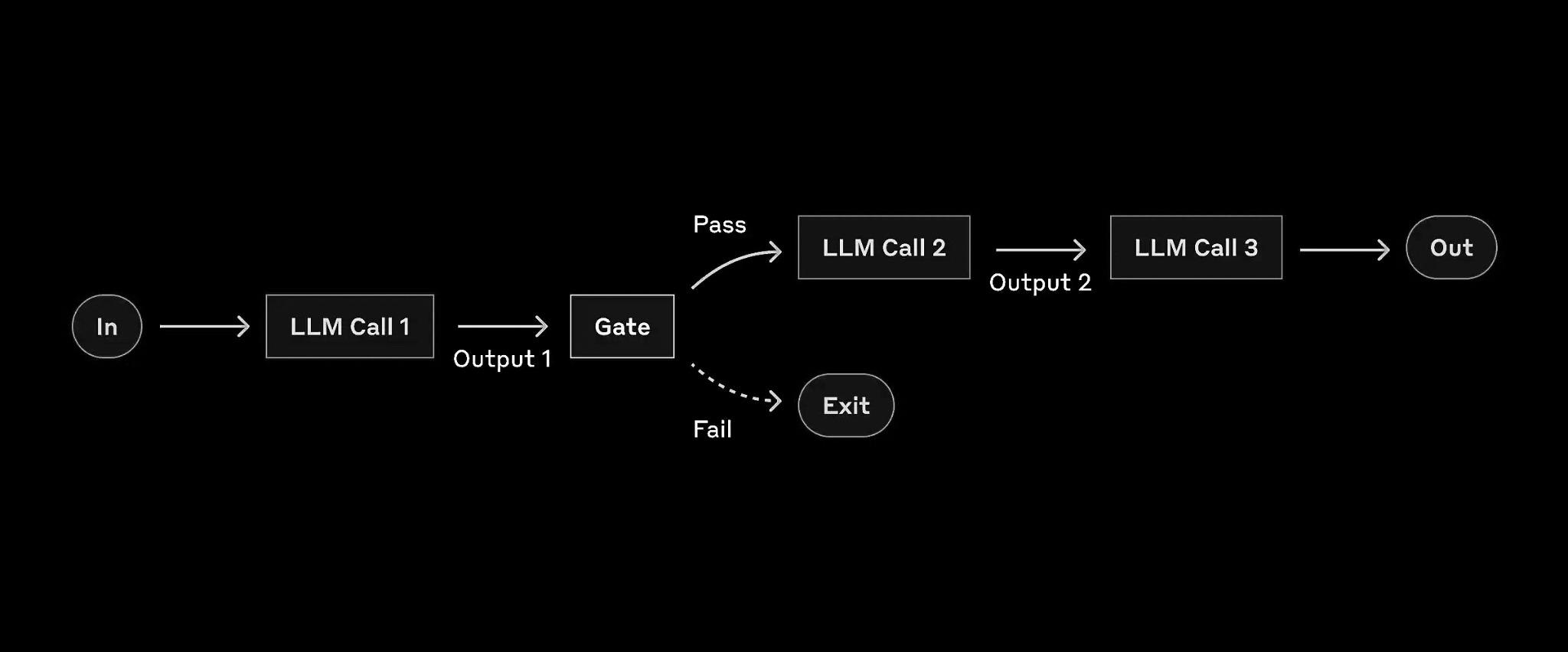

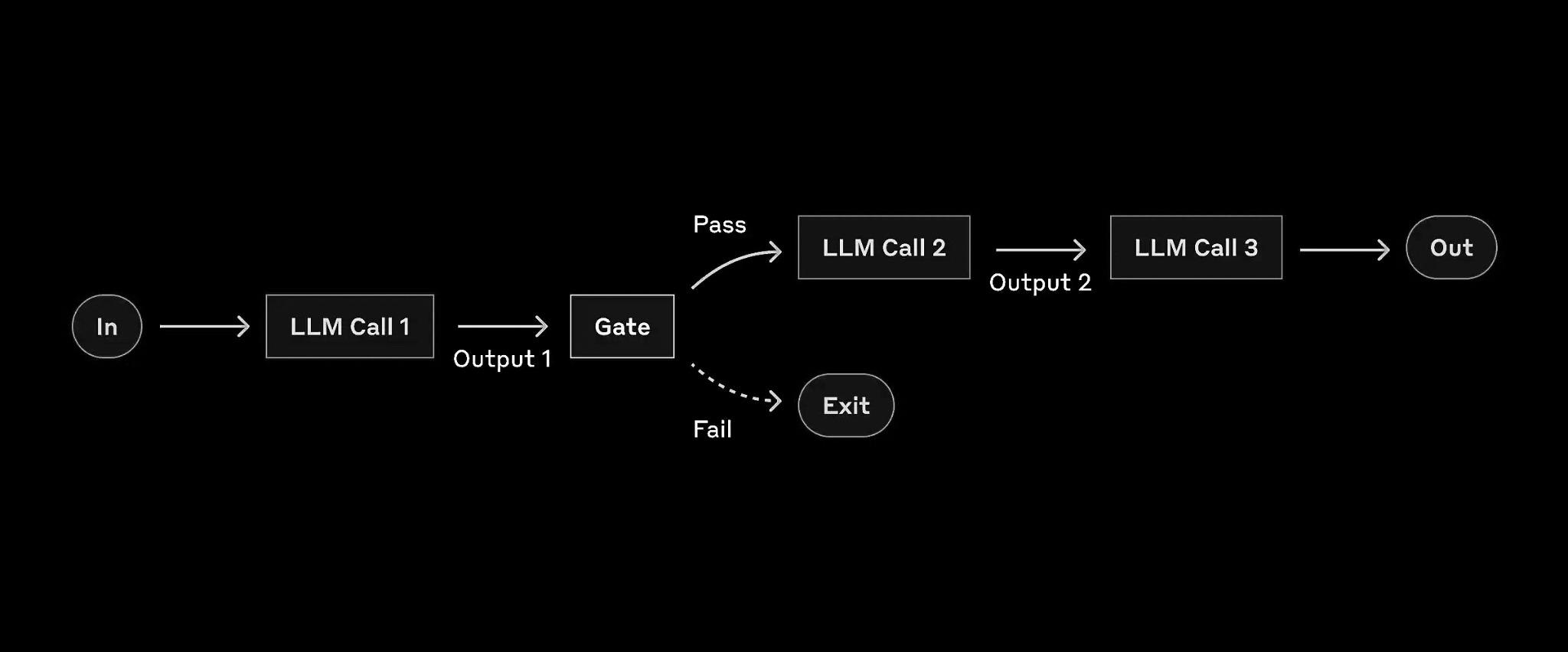

Workflow

Prompt chaining breaks a complex task into a series of smaller, manageable steps. Each step in the chain takes the output of the previous step as its input, so the result is refined one stage at a time.

Diagram Explanation

The diagram reads from left to right. Each block represents an LLM call, and each arrow shows where that call's output goes. The first LLM generates initial content from the input, the second LLM refines or builds on that output, and the third LLM produces the final output.
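The left-to-right flow can be sketched without any API access by standing in a plain function for each LLM block. The names `generate`, `refine`, `finalize`, and `run_pipeline` are hypothetical, and the stage bodies are placeholders rather than real model calls. The only point is that each stage consumes the previous stage's output.

```python
from typing import Callable, List


# Hypothetical stand-ins for the three LLM blocks in the diagram.
# A real stage would call a model; these only wrap the text so the flow is visible.
def generate(text: str) -> str:
    return f"draft({text})"


def refine(text: str) -> str:
    return f"refined({text})"


def finalize(text: str) -> str:
    return f"final({text})"


def run_pipeline(user_input: str, stages: List[Callable[[str], str]]) -> List[str]:
    """Feed each stage the previous stage's output and keep every intermediate result."""
    outputs = []
    current = user_input
    for stage in stages:
        current = stage(current)
        outputs.append(current)
    return outputs


outputs = run_pipeline("topic", [generate, refine, finalize])
print(outputs[-1])  # final(refined(draft(topic)))
```

Keeping the intermediate outputs, not just the last one, makes it easier to see which stage introduced a problem.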

Use Cases

- Content Creation Pipeline: Generate an article outline, expand it into a draft, then polish it into a final version.

- Multi-step Problem Solving: Break down a complex math problem, solve each part sequentially, then combine the results.

- Language Translation with Refinement: Translate text to an intermediate language, then to the target language, and finally refine for cultural nuances.
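Each use case above maps to an ordered list of prompts. The following is a minimal sketch under stated assumptions: the prompt wording is illustrative and not taken from the recipe, and `recording_llm` is a hypothetical offline stub used in place of a real model call so the hand-off between steps can be inspected.

```python
from typing import Callable, Dict, List

# Illustrative prompt chains, one per use case. The wording is an assumption.
USE_CASE_CHAINS: Dict[str, List[str]] = {
    "content_creation": [
        "Write an outline for an article on the topic.",
        "Expand the outline into a full draft.",
        "Polish the draft into a final version.",
    ],
    "problem_solving": [
        "Break the problem into smaller parts.",
        "Solve each part in order.",
        "Combine the partial results into one answer.",
    ],
    "translation": [
        "Translate the text into the intermediate language.",
        "Translate the result into the target language.",
        "Refine the translation for cultural nuances.",
    ],
}

calls: List[str] = []  # every prompt the stub receives, in order


def recording_llm(prompt: str) -> str:
    """Offline stub: log the prompt and return a numbered placeholder."""
    calls.append(prompt)
    return f"<output of step {len(calls)}>"


def run_chain(query: str, prompts: List[str], llm: Callable[[str], str]) -> str:
    """Run the prompts in order, passing each response into the next prompt."""
    response = query
    for prompt in prompts:
        response = llm(f"{prompt}\nInput:\n{response}")
    return response


result = run_chain("prompt chaining", USE_CASE_CHAINS["content_creation"], recording_llm)
print(result)    # <output of step 3>
print(calls[1])  # the second prompt, which embeds the first step's output
```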

Implementation

    from typing import List

    from helpers import run_llm


    def serial_chain_workflow(input_query: str, prompt_chain: List[str]) -> List[str]:
        """Run a serial chain of LLM calls to address the `input_query`
        using a list of prompts specified in `prompt_chain`.
        """
        response_chain = []
        response = input_query
        for i, prompt in enumerate(prompt_chain):
            print(f"Step {i+1}")
            # Each step receives its own prompt plus the previous step's output.
            response = run_llm(
                f"{prompt}\nInput:\n{response}",
                model='meta-llama/Meta-Llama-3.1-70B-Instruct-Turbo'
            )
            response_chain.append(response)
            print(f"{response}\n")
        return response_chain


    # Example
    question = "Sally earns $12 an hour for babysitting. Yesterday, she just did 50 minutes of babysitting. How much did she earn?"

    prompt_chain = [
        """Given the math problem, ONLY extract any relevant numerical information and how it can be used.""",
        """Given the numerical information extracted, ONLY express the steps you would take to solve the problem.""",
        """Given the steps, express the final answer to the problem."""
    ]

    responses = serial_chain_workflow(question, prompt_chain)

    # The last response in the chain is the final answer.
    final_answer = responses[-1]
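The recipe imports `run_llm` from a `helpers` module that is not shown here. One way such a helper could look is sketched below, assuming a client object that exposes the OpenAI-style `chat.completions.create(model=..., messages=...)` method and returns the text at `choices[0].message.content`. The factory name `make_run_llm`, the `system_prompt` parameter, and the fake client are all hypothetical and exist only so the sketch runs offline. This is not the recipe's actual helper.

```python
from types import SimpleNamespace
from typing import Callable, Optional


def make_run_llm(client) -> Callable[..., str]:
    """Build a `run_llm(user_prompt, model, system_prompt=None)` helper around `client`.

    Assumption: `client` follows the OpenAI-style chat completions interface.
    """
    def run_llm(user_prompt: str, model: str, system_prompt: Optional[str] = None) -> str:
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": user_prompt})
        response = client.chat.completions.create(model=model, messages=messages)
        return response.choices[0].message.content

    return run_llm


# Hypothetical offline stand-in for a real client, used only to exercise the helper.
def _fake_create(model, messages):
    reply = f"{model} saw {len(messages)} message(s)"
    return SimpleNamespace(
        choices=[SimpleNamespace(message=SimpleNamespace(content=reply))]
    )


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create))
)

run_llm = make_run_llm(fake_client)
print(run_llm("Hello", model="demo-model"))  # demo-model saw 1 message(s)
```

With a real client, pass it to `make_run_llm` in place of `fake_client`. Client construction and credentials depend on the provider and are left out here.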